Message Passing Programming

MPI modes

Different Send

Full form

Synchronous Send

Buffered Send

standard Send

Synchronous?

Guaranteed to be synchronous

Guaranteed to be asynchronous

may be both

Behavior

Routine will not return until message has been delivered.

Routine immediately returns.

Bsend when buffer is not full, Ssend when buffer is full.

Impact

May result in forever waiting.

System helps copy data into a buffer and sends it later on.

Unlikely to fail.

To elaborate:

Ssendwill wait until the receiver callsRecv, therefore it will waste the waiting time and even result in forever waiting (deadlock) if the receiver does not call.Can be used to debug the deadlock situation by replacing

SendwithSsend.

Bsendwill copy values into system buffer, and returns immediately, the sending data can be overwritten after that. When the receiver callsRecv, system will send the data in the buffer to the receiver.If

Recvis called earlier than theBsend, sinceRecvis always synchronous, the receiver will be blocked in this function untilBsendis issued.User should provide enought buffer space in

Bsendby usingMPI_Buffer_attach.If there is not enough space,

Bsendwill FAIL.Not encouraged to use, because when it is not feasible to correctly compute the amount of buffer that is needed, it will cause unrecoverable error. Ref

Also Noted:

BSendis different from non-blocking Send (i.e. Isend).

MPI_Sendtries to solve problems above by:Allocate buffer space by system.

Behave Asynchronous when buffer has space, behave Synchronous when buffer is full.

Corner case of

MPI_Send:

Process A and Process B both run

Send() & Recv()to the other, will deadlock if anyone'sSendbecomes synchronous. Correct way to avoid deadlock: either match sends and receives explicitly instead of determining by system. e.g. ping-pong send&recv.

MPI_Probe

For checking whether the messages have arrived, use

MPI_Probe. The usage is the same asMPI_Recvexcept no receive buffer specified.int MPI_Probe(int source, int tag, MPI_Comm comm, MPI_Status * status)in MPICH.Status is set as if the receive took place, it contains count, cancelled, MPI_SOURCE, MPI_TAG, MPI_ERROR.

Be careful when using wildcards like MPI_ANY_SOURCE to probe message, SHOULD specify the source rank when receiving messages by

MPI_Recv(buffer, count, datatype, probe_status.MPI_SOURCE, ...)Noted: In OpenMPI 4.1.1 version, the count and cancelled values are used inside, users are not supposed to fetch it, it will not return what users actually want.

Tag

Every message can have a Tag

non-negative integer value

maximum value can be queried using MPI_TAG_UB attribute

MPI guarantees to support tags of at least 32767

Most programs set tag to zero for convenience.

Can be useful for filtering messages, choosing to receive messages with specific tag. Also in Debugging, always tag messages with the rank of the sender.

Most commonly use the wildcards MPI_ANY_TAG, which means accept all messages regardless of the tag, and later finds out the actual tag value by looking at the

MPI_Status.

Communicator

All MPI communications take place within a communicator.

a communicator is a group of processes.

Commonly use the pre-defined communicator: MPI_COMM_WORLD which involves ALL processes.

MPI_COMM_SELF contains only one self process in contrast.

Communicator has no wildcards, messages can only be transferred in one comm.

int MPI_Comm_split(MPI_Comm comm, int color, int key, MPI_Comm *newcomm)in MPICHcolordecides the sub-communicator,keydecides the new rank in new comm.Algorithm:

Use MPI_Allgather to get the color and key from each process

Count the number of processes with the same color; create a communicator with that many processes. If this process has

MPI_UNDEFINEDas the color, create a process with a single member.Use key to order the ranks

int MPI_Comm_dup(MPI_Comm comm, MPI_Comm * newcomm)in MPICH can duplicate an existing communicator with all its cached information (not limited to MPI_COMM_WORLD).Enables processes to communicate with each other safely within a piece of code

Essential for people writing parallel libraries (e.g. FFT) to avoid library messages becoming mixed up with user messages.

Isolation guaranteed by communicator is stronger than Tag value because of the wildcard of latter one.

Bad practice to use MPI_COMM_WORLD explicitly in the routines, better using a variable which allows for greater flexibility later on. e.g.

Traffic Flow

Definition:

divide road into a series of cells

either occupied or unoccupied

perform a number of steps

each step, cars move forward if space ahead is empty

Circle road: if a car moves off the right, it appears on the left.

i.e. identity cell N+1 with cell 1, and cell 0 with cell N

Cell Update Rule:

If old(i) = 0, it means cell i is empty, the next status is decided by whether cell i-1 has car.

0

0

0

1

1

1

If old(i) = 1, it means cell i is not empty, the next status is decided by whether it can move forward to cell i+1 if cell i+1 is empty.

0

0

1

1

0

1

To conclude, it can be represented as below in mathematics: $new_i = (old_i&old_{i+1}) | ((!old_i)&old_{i-1})$

Solve

Split the data into nprocs, initialize at rank 0 and send to other ranks.

In every iteration:

Recv old[0] from last rank old[n]

Recv old[n+1] from next rank old[1]

Send old[1] to last rank old[n+1]

Send old[n] to next rank old[0]

Swap the Send and Recv order according to the odd-even of rank to avoid deadlock.

Sum reduce the move count of each rank to master rank.

Result:

serial-1

113.23

955.58

2

140.44

142.75

4

276.09

1811.29

8

530.58

1856.21

16

1012.64

1842.6

32

1496.01

1495.96

48

2008.33

1939.41

Interesting Found:

Only apply OpenMP can achieve satisfying result as well, from 113 to 955.58.

MPI+OpenMP does not perform well when nprocs is 2 and 32.

Apart from nprocs is 2 and 32, other MPI+OpenMP combinations can reach similar performance.

Is

./trafficthe same asmpirun -n 1 traffic(the same binary file) ?./trafficis much faster thanmpirun -n 1 traffic, MCOPs 989.14 compared to 110.28, maybe because getting rid of MPI_Env setup?

Message-Passing Strategy

Broadcast data to 2 processes

Split calculation between 2 processes

Globally resynchronize all data after each move

Analogy: In deep learning, it is similar to data parallelism. In data parallelism, each worker holds an entire copy of model and trains different data. After each epoch, all workers share the weight learned from its part of data.

Parallelisation Strategy

Scatter data between 2 processes: distributed data strategy

Internal cells can be updated independently.

Must communicate with neighboring processes to update the edge cells.

Sum local number of moves on each process to obtain total number of moves at each iteration.

Analogy: In deep learning, it is similar to model parallelism. In model parallelism, each worker has a part of model and need to pass the boundary value to the next partial model to pipeline the whole training.

Non-Blocking Communications

Difference:

synchronous / asynchronous is to describe the relation between two modules. blocking / non-blocking is to describe the situation of one module. Reference here

Synchronous mode affects completion, while blocking-mode affects initialization.

Non-blocking calls return straight away and allow the sub-program to continue to perform other work. At some later time the sub-program can test or wait for the completion of the non-blocking operation.

All non-blocking operations should have matching wait operations. Some systems cannot free resources until wait has been called.

A non-blocking operation immediately followed by a matching wait is equivalent to a blocking operation.

Non-blocking operations are not the same as sequential subroutine calls as the operation continues after the call has returned (in background).

Separate communication into three phases:

Initiate non-blocking communication.

Do some other works.

Wait for the non-blocking communication to complete.

Send

Related functions matrix (Link to MPICH):

Receive

int MPI_Irecv(void *buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Request *request) in MPICH

Send and receive can be blocking or non-blocking.

A blocking send can be used with a non-blocking receive, and vice-versa.

Test & Wait

MPI_Ixxx will return a request handle, which can be used for future detect, by Test or Wait.

Related functions matrix (Link to MPICH):

SendRecv combination

int MPI_Sendrecv(const void *sendbuf, int sendcount, MPI_Datatype sendtype, int dest, int sendtag, void *recvbuf, int recvcount, MPI_Datatype recvtype, int source, int recvtag, MPI_Comm comm, MPI_Status *status) in MPICH

Specify all send/receive arguments in one call

MPI implementation avoids deadlock

useful in simple pairwise communication patterns, but not as generally applicable as non-blocking.

Possible implementations:

determine the send and recv sequence by the rank, e.g. ping

Use Non-blocking mode and Wait later. e.g.

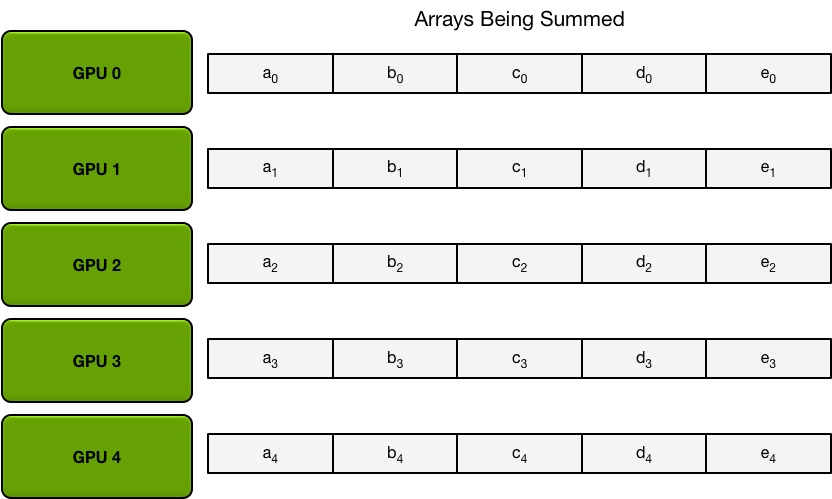

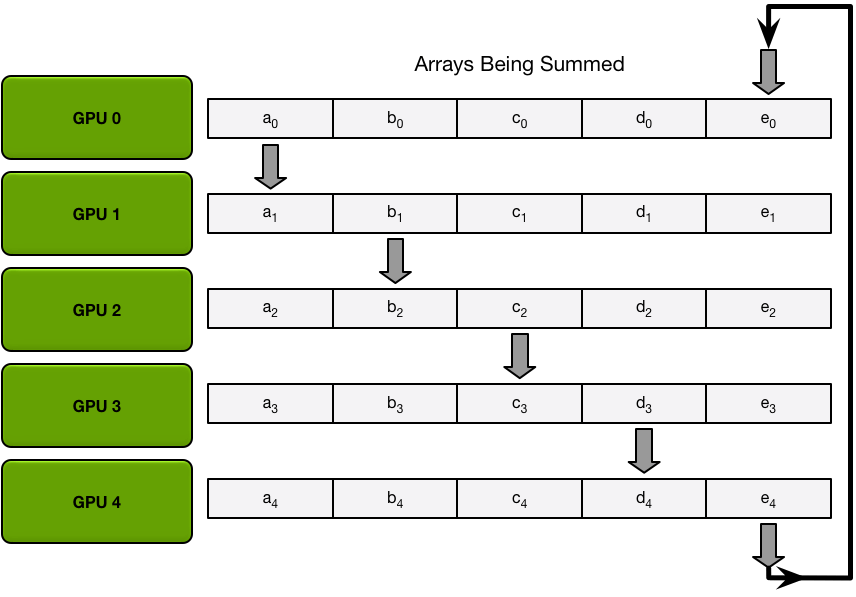

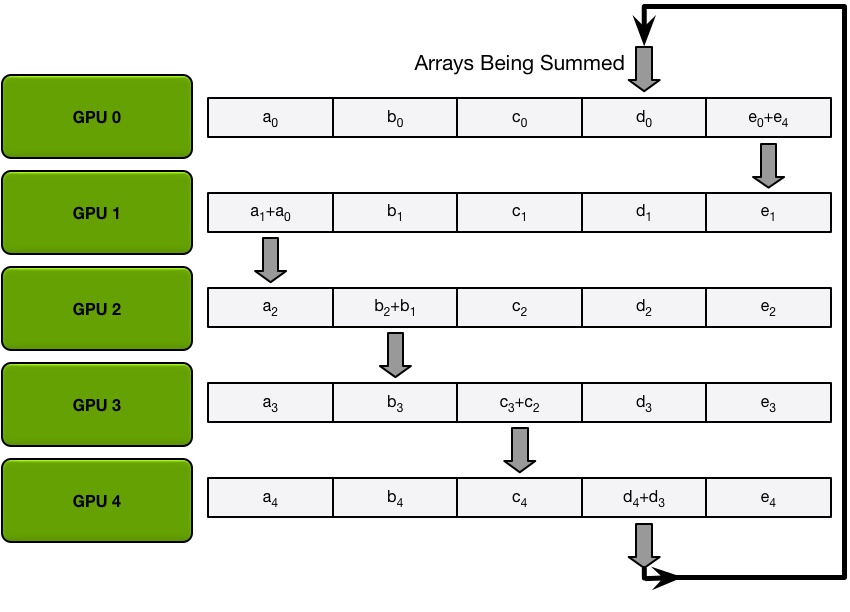

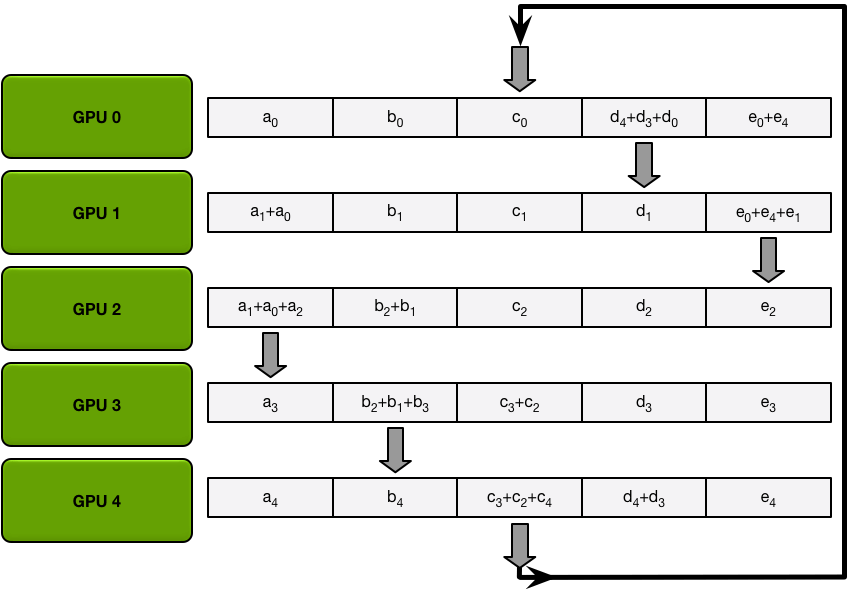

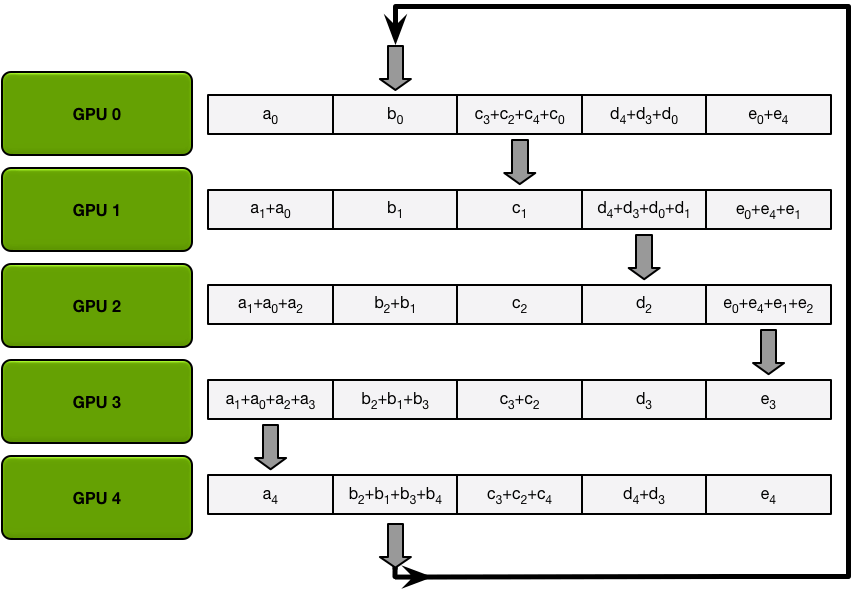

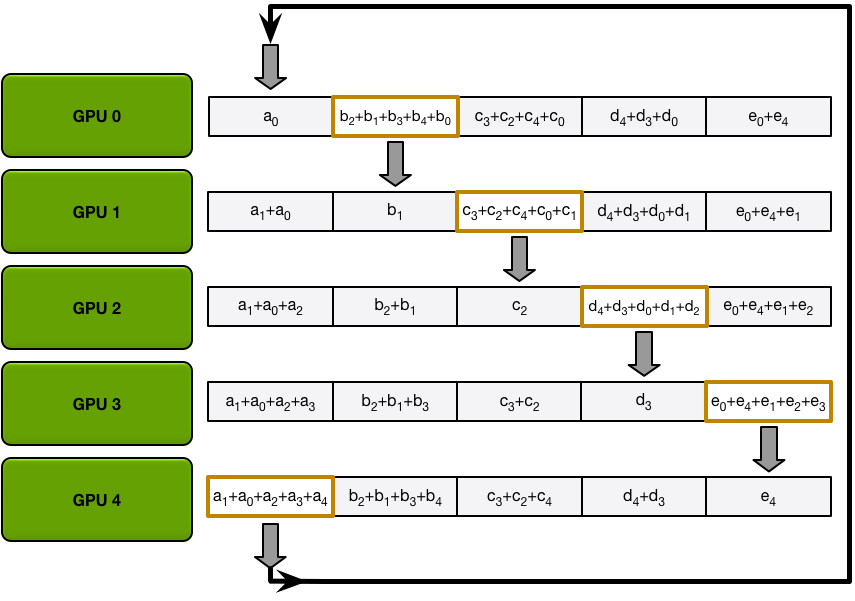

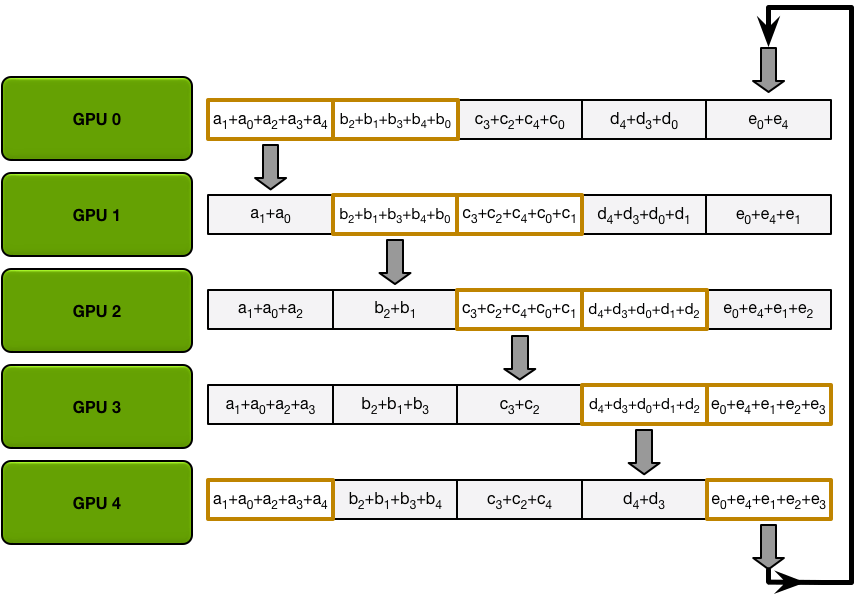

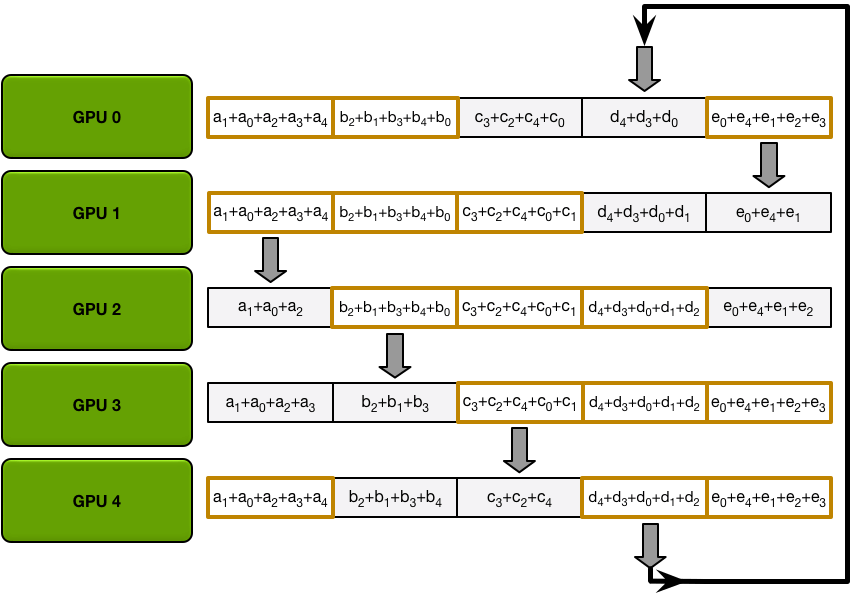

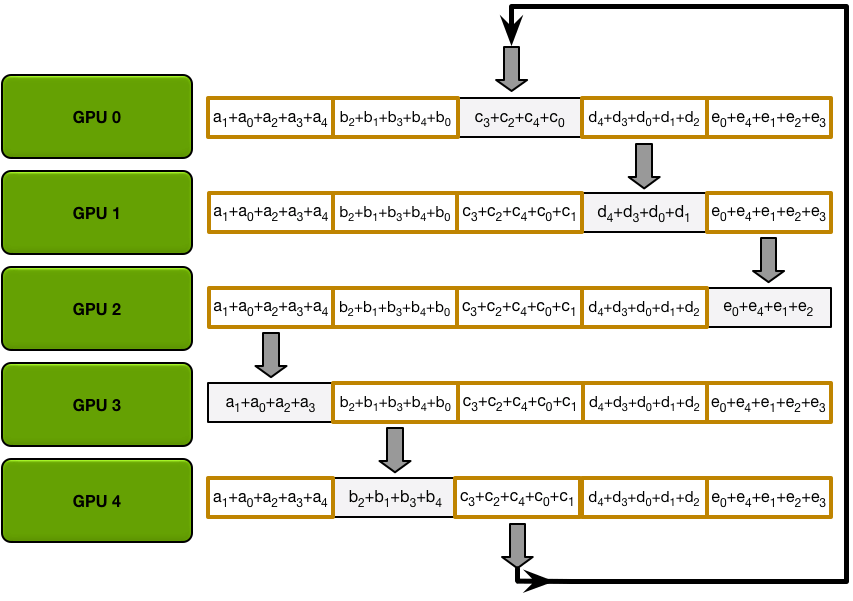

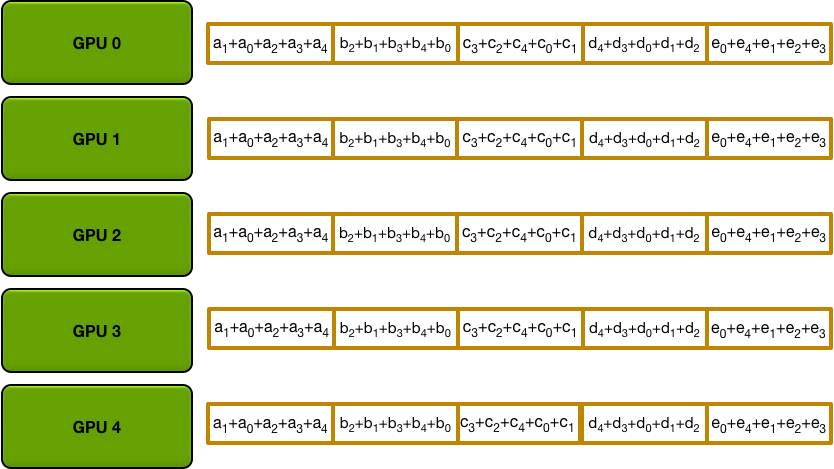

Send and recv as a ring situation: In deep learning, the AllReduce reduction can be achieved by Ring AllReduce, each worker receives a partial from the backward neighbor and sends a different partial to the forward neighbor. After N times, all workers have accumulated a fixed partial from all other workers, and then broadcast its completed part to others. Details can be seen on this blog.

Last updated